It's not just Google — closer inspection reveals Bing's AI also flubbed the facts in its big reveal

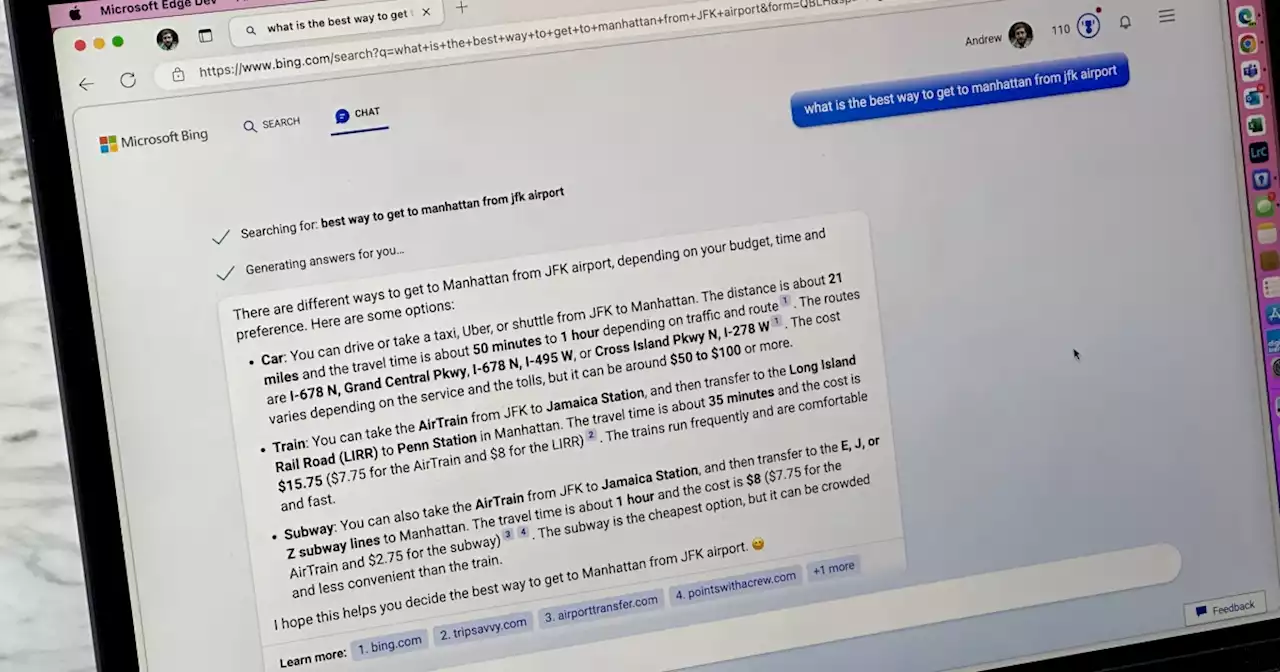

In one example, Bing's AI was asked to list the pros and cons of the three best-selling pet vacuums. The chatbot made a list for the "Bissel Pet Hair Eraser Handheld Vacuum," with cons including its noise level and short cord. But when Brereton checked the review it linked to as a source, he noticed that the review didn't mention the vacuum's noisiness. Plus, it's cordless.

In another example, Bing was prompted to create a five-day itinerary for a trip to Mexico City and was asked for nightlife suggestions.

After cross-referencing the bot's answers with his own research, Brereton found some of the descriptions the bot spit out were wrong. In one case, the bot recommended going to a bar's website to book a reservation and check out its menu — but neither reservations nor the menu are available on the bar's site. For two other bars, the bot said there were no reviews online. There are, in fact, hundreds for one and thousands for the other.

The most egregious mistake Bing made during its chatbot demo, Brereton said, was fabricating numbers after it was asked about the key takeaways from [...]. The technology mislabeled some of the numbers, like the adjusted gross margin, and other values, like diluted earnings per share, were "completely made up."

"We're aware of this report and have analyzed its findings in our efforts to improve this experience," a Microsoft spokesperson told Insider. "We recognize that there is still work to be done and are expecting that the system may make mistakes during this preview period, which is why the feedback is critical so we can learn and help the models get better."

[...] asked Bing to list the movies showing in a particular London neighborhood.

Similar news: You can also read related stories that we have collected from other news sources.

Microsoft’s Bing AI, like Google’s, also made dumb mistakes during first demo: Microsoft says it’s learning from the feedback.

Google explains why it won't let you customize the look of Google Assistant on Android: Google posted a support page revealing the reason why Assistant is available in Dark mode only on Android.

Will You Be My GPT-Valentine? | HackerNoon: Discover the Valentine's plans of the biggest celebrities of our time! In this post, check out plans from Barack Obama to Taylor Swift, according to ChatGPT.

Microsoft's ChatGPT Bing search is rolling out to users: Microsoft's ChatGPT Bing search service has started rolling out to users who registered. Here's what you need to know.

Microsoft is already opening up ChatGPT Bing to the public | Digital Trends: Microsoft has begun the initial public rollout of its Bing search engine with ChatGPT integration after a media preview that was sent out last week.

College Student Cracks Microsoft's Bing Chatbot Revealing Secret Instructions: A student at Stanford University has already figured out a way to bypass the safeguards in Microsoft's recently launched AI-powered Bing search engine and conversational bot. The chatbot revealed that its internal codename is 'Sydney' and that it has been programmed not to generate jokes that are 'hurtful' to groups of people or provide answers that violate copyright laws.
